LLM Panels (deprecated)

Comet's LLMOps tools fall into three categories:

- Prompt Playground: Interact with Large Language Models from the Comet UI. All your prompts and responses will be automatically logged to Comet.

- Prompt History: Track all your prompt / response pairs. You can also view prompt chains to identify where issues might be occurring.

- Prompt Usage Tracking: Get a granular view of token usage.

Note

The LLM Panels will be deprecated in favour of LLM Projects. You can learn more in the LLM Projects documentation.

Adding this tool to your projects

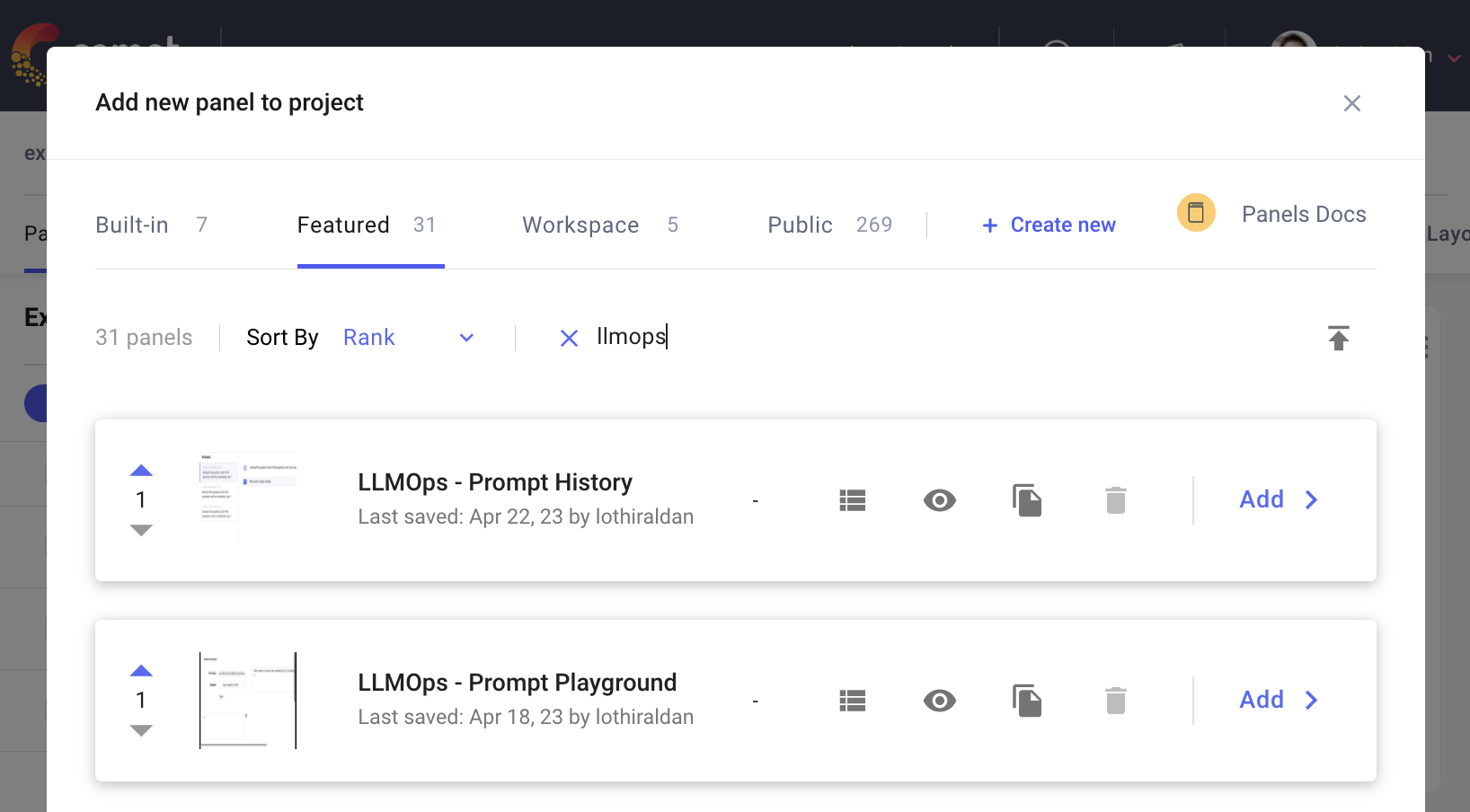

Comet's LLMOps tools are all available as panels, which can be added either to your Comet projects or to any experiment. You can find these panels by searching for llmops in the Featured Panels when adding a panel.

Prompt Playground

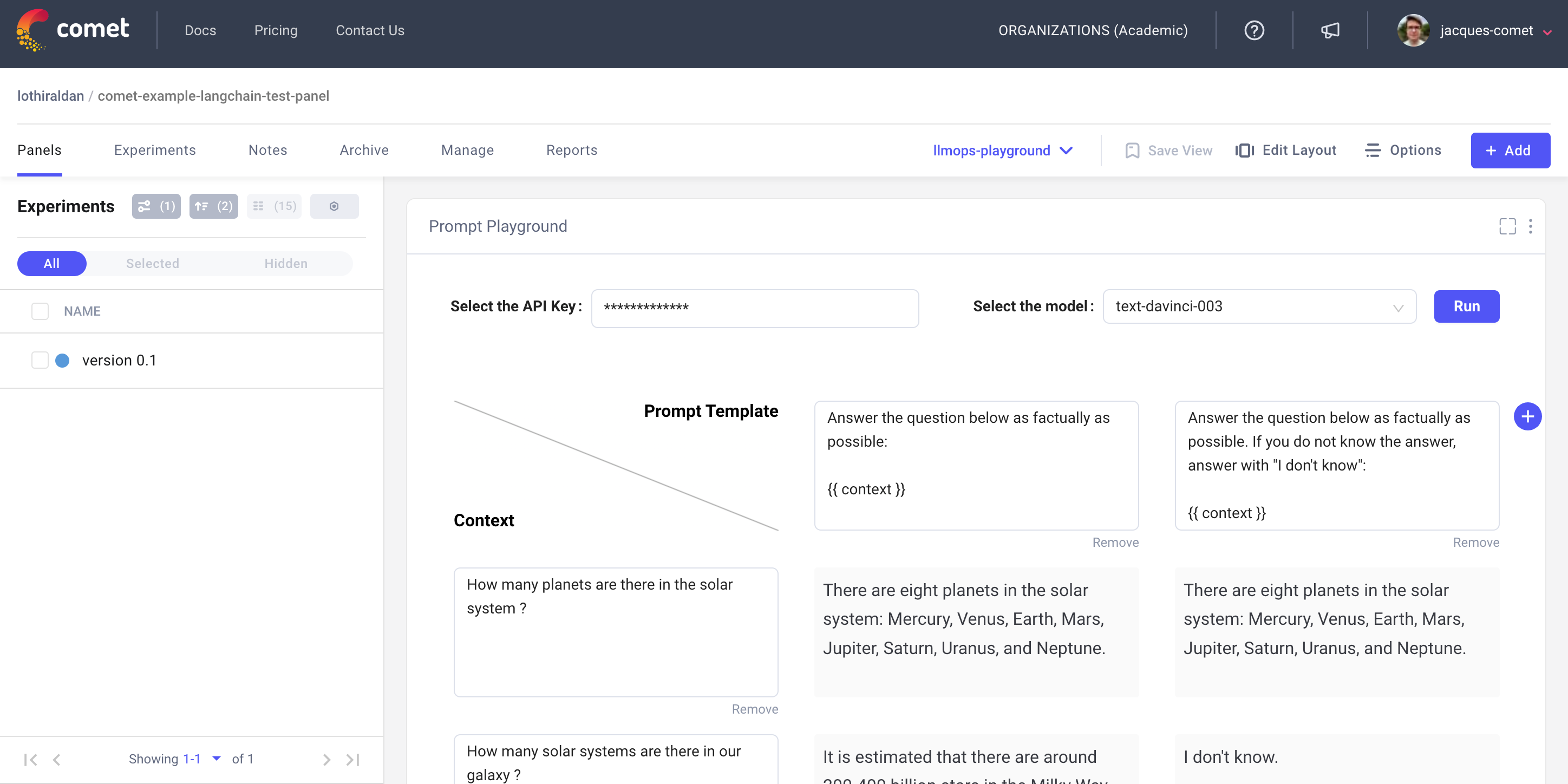

Comet's Prompt Playground allows you to query OpenAI's Large Language Models straight from your dashboard. All the prompts and responses will be automatically recorded so that you can review them at a later date using the Prompt History panel.

To add the Prompt Playground panel to one of your dashboards, simply navigate to the featured panel list and choose Prompt Playground.

The Prompt Playground is designed to let you define many different prompt templates and quickly evaluate them against a set of contexts. If you add the variable {{ context }} to a prompt in one of the column text fields, it is replaced dynamically by the context defined on each row. When you click Run, the prompts are sent to the selected OpenAI model, and you can view the response for each prompt template / context combination within the panel.

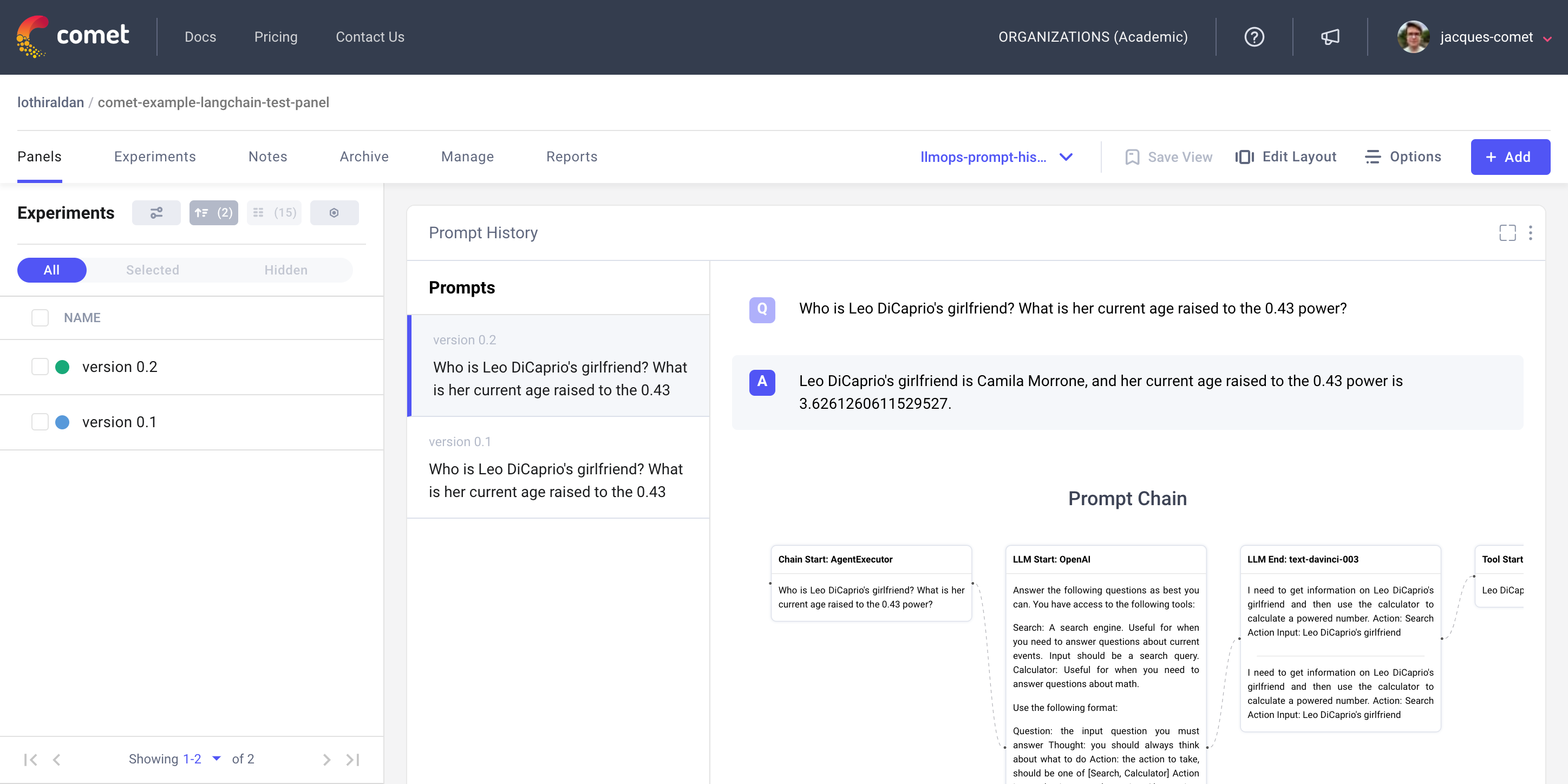
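The substitution described above can be sketched in a few lines of Python. This is only an illustration of how a grid of templates and contexts expands into individual prompts, not Comet's implementation; the function name `render_prompts` and the sample templates and contexts are assumptions made up for this example.

```python
from itertools import product

# Placeholder syntax as described for the Prompt Playground columns.
PLACEHOLDER = "{{ context }}"


def render_prompts(templates, contexts):
    """Return one rendered prompt per (template, context) pair.

    Hypothetical helper: it mimics the panel's grid by plain string
    replacement and does not call any Comet or OpenAI API.
    """
    grid = []
    for template, context in product(templates, contexts):
        grid.append(
            {
                "template": template,
                "context": context,
                "prompt": template.replace(PLACEHOLDER, context),
            }
        )
    return grid


# Made-up sample data: two templates (columns) and two contexts (rows).
templates = [
    "Summarize the following text: {{ context }}",
    "List the key points in: {{ context }}",
]
contexts = ["Comet tracks experiments.", "LLMs are priced by token."]

grid = render_prompts(templates, contexts)
for cell in grid:
    print(cell["prompt"])
```

Two templates and two contexts yield four prompts, which corresponds to one response cell per template / context combination in the panel.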

Prompt History

Prompt engineering relies on the manual review of many different prompt / completion combinations. The Prompt History panel can be used to visualize these, as well as the chains that lead to a given final response.

The Prompt History panel currently supports our LangChain and OpenAI integrations as well as the Prompt Playground panel.

Prompt Usage Tracking

Some of the best Large Language Models can only be accessed through paid APIs that are priced by token. When experimenting with many different use cases, the associated costs can become difficult to track. With the Prompt Usage Tracking panel, you can track daily token usage by project and view token usage by experiment run.
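To make the two views concrete, here is a minimal sketch of the kind of aggregation involved: summing tokens per day and per experiment run. The record layout, field names, and values are hypothetical sample data for illustration only; they are not the format Comet stores or the panel's actual logic.

```python
from collections import defaultdict

# Hypothetical usage records; field names and numbers are made up.
records = [
    {"date": "2023-05-01", "experiment": "run-a", "prompt_tokens": 12, "completion_tokens": 30},
    {"date": "2023-05-01", "experiment": "run-b", "prompt_tokens": 20, "completion_tokens": 50},
    {"date": "2023-05-02", "experiment": "run-a", "prompt_tokens": 8, "completion_tokens": 15},
]


def tokens_by(records, key):
    """Sum prompt and completion tokens, grouped by the given record field."""
    totals = defaultdict(int)
    for record in records:
        totals[record[key]] += record["prompt_tokens"] + record["completion_tokens"]
    return dict(totals)


daily_usage = tokens_by(records, "date")
usage_per_experiment = tokens_by(records, "experiment")

print(daily_usage)
print(usage_per_experiment)
```

Multiplying these totals by your provider's per-token price gives a rough cost estimate per day or per run.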

Logging data to use with the LLM Panels

Comet has integrations with both LangChain and the OpenAI SDK. When you use these tools, Comet automatically saves the prompts, responses, and chains in a format compatible with the LLM Panels.

Logging LLM data to Comet takes just two lines of code.

Here is an example using LangChain:

```python
import os

# Credentials are read from environment variables; replace the placeholders.
os.environ["COMET_API_KEY"] = "Your Comet API Key"
os.environ["OPENAI_API_KEY"] = "Your OpenAI API Key"
os.environ["SERPAPI_API_KEY"] = "Your SerpAPI API Key"

from langchain.agents import initialize_agent, load_tools
from langchain.callbacks import CometCallbackHandler, StdOutCallbackHandler
from langchain.callbacks.base import CallbackManager
from langchain.llms import OpenAI

# Callback that logs prompts, responses, and chains to a Comet project.
comet_callback = CometCallbackHandler(
    project_name="comet-example-langchain",
    complexity_metrics=True,
    stream_logs=True,
    tags=["agent"],
)
manager = CallbackManager([StdOutCallbackHandler(), comet_callback])

# Attach the same callback manager to the LLM, the tools, and the agent.
llm = OpenAI(temperature=0.9, callback_manager=manager, verbose=True)
tools = load_tools(["serpapi", "llm-math"], llm=llm, callback_manager=manager)
agent = initialize_agent(
    tools,
    llm,
    agent="zero-shot-react-description",
    callback_manager=manager,
    verbose=True,
)

agent.run(
    "Who is Leo DiCaprio's girlfriend? What is her current age raised to the 0.43 power?"
)

# Send the collected data to Comet and end the run.
comet_callback.flush_tracker(agent, finish=True)
```

Here is an example using OpenAI:

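```python
import os

import comet_ml
import openai

# Create a Comet experiment; the API key, project, and workspace are placeholders.
experiment = comet_ml.Experiment(
    api_key="YOUR_API_KEY",
    project_name="YOUR_PROJECT_NAME",
    workspace="YOUR_WORKSPACE",
)

# Read the OpenAI API key from the environment.
openai.api_key = os.getenv("OPENAI_API_KEY")

# Send a completion request to OpenAI.
openai.Completion.create(
    model="text-davinci-003",
    prompt="Say this is a test",
    max_tokens=7,
    temperature=0,
)
```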

Once the data is logged to Comet, you can analyze the prompts and chains using the LLMOps - Prompt History panel. This panel can be found in the Featured section and can be added at either a project or experiment level.